DotNetNuke is a free, Open Source Framework ideal for creating Enterprise Web Applications.

DotNetNuke is an open source web application framework ideal for creating, deploying and managing interactive web, intranet and extranet sites.

DotNetNuke is designed to make it easy for users to manage all aspects of their projects. Site wizards, help icons, and a well-researched user interface allow universal ease-of-operation.

DotNetNuke can support multiple portals or sites off of one install. In dividing administrative options between host level and individual portal level, DotNetNuke allows administrators to manage any number of sites – each with their own look and identity – all off one hosting account.

DotNetNuke comes loaded with a set of built-in tools that provide powerful pieces of functionality. Site hosting, design, content, security, and membership options are easily managed and customized through these tools.

DotNetNuke is supported by its Core Team of developers and a dedicated international community. Through user groups, online forums, resource portals and a network of companies who specialize in DNN®, support is always close at hand.

DotNetNuke can be up-and-running within minutes. One must simply download the software from DotNetNuke.com, and follow the installation instructions. In addition, many hosting companies offer free installation of the DotNetNuke application with their plans.

DotNetNuke includes a multi-language localization feature which allows administrators to easily translate their projects and portals into any language. And with an international group of hosts and developers working with DotNetNuke, familiar support is always close at hand.

DotNetNuke is provided free, as open-source software, and licensed under a standard BSD agreement. It allows individuals to do whatever they wish with the application framework, both commercially and non-commercially, with the simple requirement of giving credit back to the DotNetNuke project community.

DotNetNuke provides users with an opportunity to learn best-practice development skills — module creation, module packaging, debugging methods, etc — all while utilizing cutting-edge technologies like ASP.NET 2.0, Visual Web Developer (VWD), Visual Studio 2005 and SQL Server 2005 Express.

DotNetNuke is able to create the most complex content management systems entirely with its built-in features, yet also allows administrators to work effectively with add-ons, third party assemblies, and custom tools. DNN modules and skins are easy to find, purchase, or build. Site customization and functionality are limitless.

NUnit

Introduction

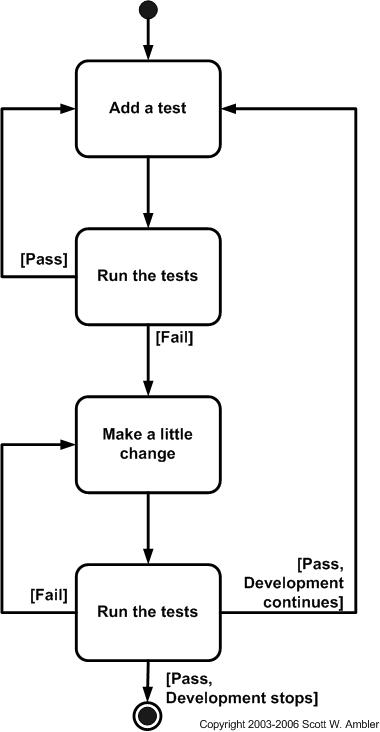

Software testing is usually done at the end of the development cycle and handed off to a separate testing department, which makes it a low priority in the development process. As a result, errors are detected at the very end, and sometimes only by the customer.

In reality, testing is much easier than its reputation suggests. Testing can even be fun, because it leads to better design and cleaner code. The time spent specifying test cases quickly pays off: you define a series of test cases once and can run them again and again. Regardless of looming deadlines, the developer always has a complete test suite at hand. This saves valuable time when integrating new functions and is a significant advantage for both new and ongoing development.

What Are Unit Tests?

A unit test is nothing more than a code wrapper around application code that lets test tools execute that code and report a pass or fail result.
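To make the "code wrapper" idea concrete, here is a minimal hand-rolled sketch that uses no test framework at all. The Greeter class and the test method name are hypothetical and exist only for this illustration; a framework such as NUnit automates the discovery, execution, and reporting that Main does by hand here.

```csharp
using System;

// Hypothetical application code under test.
public static class Greeter
{
    public static string Greet(string name)
    {
        return "Hello, " + name + "!";
    }
}

public static class HandRolledTest
{
    // The "wrapper": calls the application code and reduces the
    // outcome to a pass (true) or fail (false) result.
    public static bool GreetReturnsExpectedText()
    {
        string actual = Greeter.Greet("World");
        return actual == "Hello, World!";
    }

    public static void Main()
    {
        // A test tool would normally do this reporting for us.
        Console.WriteLine(GreetReturnsExpectedText() ? "PASS" : "FAIL");
    }
}
```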

Why Should You Use Unit Tests?

Forget for a moment that there is something called XP (Extreme Programming) that popularized the term "unit test". Most projects developed today are under tight schedules and usually have only their own developers as testers of the code. By writing the unit tests themselves, developers get a head start towards bug-free, quality code.

One may argue that if developers write all the unit tests themselves, the tests are likely to pass trivially, because they are based either on foreknowledge of the application code or on the assumptions built into it. Do not be fooled by this argument. Imagine what happens when a developer changes the application: her old test cases will break, forcing her either to rethink her changes or to rewrite the unit tests.

The application architect or analyst can write all the unit test cases up front (not what XP recommends, but we are not worried about that here) and test the developed code against these cases. The advantage is a well-defined deliverable for the developer and more quantifiable progress. A developer can also use this approach to discipline her work habits. For example, she can write the set of unit tests that she wants to satisfy in a day's work. Once the tests are ready, she can start developing the application and measure her progress against them.

What is the NUnit Framework?

NUnit is a port of the JUnit framework from the Java and Extreme Programming (XP) world. It is an open source product that you can download from http://www.nunit.org. NUnit was developed from the ground up to take advantage of .NET Framework features, and it uses an attribute-based programming model. It loads test assemblies in a separate application domain, so we can test an application without restarting the NUnit test tools. NUnit also watches for file and assembly change events and reloads an assembly as soon as it changes. With these features, a developer can run development and test cycles side by side.

Before we dig deeper, we should understand what the NUnit Framework is not:

It is not an automated GUI tester.

It is not a scripting language; all tests are written in a .NET-supported language, e.g. C#, Visual C++, VB.NET, or J#.

It is not a benchmark tool.

Passing the entire unit test suite does not mean the software is production ready.

Implementing the Test

You can write the test anywhere you like, for example:

A test method in an application code class; you can use #if/#endif directives to include or exclude the test code.

A test class in the application assembly, or

A separate test assembly.

I recommend implementing all tests in a separate assembly, because unit testing relates to quality assurance of the product, which is a separate concern.

Implementing unit tests within the main assembly not only bloats the actual code, it also creates an additional dependency on NUnit.Framework. In addition, in a multi-team environment, a separate unit test assembly is easier to extend and manage.

A standard naming convention also helps when you grow the test suite library for your application. We will discuss this in detail in a later section. For now, let's create our first test assembly.

Suppose we want to write a simple Calculator class with four methods, each of which takes two operands and performs a basic arithmetic operation: addition, subtraction, multiplication, or division. The code below defines the skeleton of a typical test class.

    using System;
    using NUnit.Framework;

    namespace UnitTestApplication.UnitTests
    {
        [TestFixture()]
        public class Calculator_UnitTest
        {
            // The class under test, shared by all test methods.
            private UnitTestApplication.Calculator calculator = new Calculator();

            [SetUp()]
            public void Init()
            {
                // Code that needs to run at the start of every test case.
            }

            [TearDown()]
            public void Clean()
            {
                // Code that will be called after each test case.
            }

            [Test]
            public void Test()
            {
            }
        }
    }

Things to note are:

Import the NUnit.Framework namespace.

The test class should be decorated with the TestFixture attribute.

The class should have a default (parameterless) constructor.

The class can have optional methods decorated with SetUpAttribute and TearDownAttribute. The method decorated with SetUpAttribute is called before each test case method, whereas the method decorated with TearDownAttribute is called after each test case method has executed.

All test methods should have the standard method signature

    public void [MethodName](){}

or, in VB.NET,

    Public Sub [MethodName]
    End Sub
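The call order described above can be observed with a small fixture that records each call. This is only a sketch: the class name, the test names, and the shared Log list are made up for illustration, and it assumes the attribute names used in the skeleton above.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

[TestFixture]
public class LifecycleOrderTests
{
    // Shared log (hypothetical helper) so the call order can be
    // inspected after a test run.
    public static readonly List<string> Log = new List<string>();

    [SetUp]
    public void Init()
    {
        Log.Add("SetUp");       // runs before each test method
    }

    [TearDown]
    public void Clean()
    {
        Log.Add("TearDown");    // runs after each test method
    }

    [Test]
    public void First()
    {
        Log.Add("First");
    }

    [Test]
    public void Second()
    {
        Log.Add("Second");
    }
}
```

After a run, every test name in the log should sit between its own SetUp and TearDown entries. The order in which First and Second themselves run is up to the test runner and should not be relied on.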

Now that we know the skeleton of a test class, let's look at a typical test method:

    [Test]
    public void Test_Add()
    {
        int result = calculator.Add(2, 2);

        // Expected value first, actual value second.
        Assertion.AssertEquals(4, result);
    }

The key line to note is Assertion.AssertEquals(4, result). Assertions are how a test decides between pass and fail. The NUnit framework supports the following assertions:

Assert()

AssertEquals()

AssertNotNull()

AssertNull()

AssertSame()

Fail()

You can use as many assert statements in a method as you like. As expected, NUnit reports a method as failed if even a single assertion fails. What is important to remember is that if the first assertion fails, the following assertions are not evaluated, so you learn nothing about them. It is therefore recommended to have only one assertion per test method. If you believe you need more than one, create a separate test case method for each.
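As a sketch of that recommendation, the fixture below checks two behaviours of Add in two separate test methods, so a failure in one does not hide the result of the other. The Calculator class here is a hypothetical stand-in, and the Assert.That syntax assumes a later NUnit release than the one whose Assertion class is used elsewhere in this article.

```csharp
using NUnit.Framework;

// Hypothetical stand-in for the class under test.
public class Calculator
{
    public int Add(int a, int b)
    {
        return a + b;
    }
}

[TestFixture]
public class Calculator_AddTests
{
    private Calculator calculator;

    [SetUp]
    public void Init()
    {
        // Fresh instance for every test method.
        calculator = new Calculator();
    }

    [Test]
    public void Test_AddPositiveNumbers()
    {
        Assert.That(calculator.Add(2, 2), Is.EqualTo(4));
    }

    [Test]
    public void Test_AddNegativeNumbers()
    {
        Assert.That(calculator.Add(-2, -3), Is.EqualTo(-5));
    }
}
```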

What Should Be Tested?

This is a common and valid question. Typical test cases are:

Test for boundary conditions. For example, if our Calculator class only multiplies signed integers, we can write a test that multiplies two big numbers and make sure our application code handles the result.

Test for both success and failure.

Test for general functionality.

The code below shows a typical boundary condition test for our Calculator case:

    [Test]
    [ExpectedException(typeof(DivideByZeroException))]
    public void Test_DivideByZero()
    {
        // The test passes only if this call throws DivideByZeroException.
        int result = calculator.Divide(1, 0);
    }

The key things to note here are the ExpectedExceptionAttribute and the way the boundary condition is checked. The outcome may seem obvious, because the .NET Framework raises this exception for us, but the point is to see how such a test is implemented. We can use the same technique to test boundary conditions in our own methods.
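The same idea applies to the "multiplying two big numbers" boundary mentioned earlier. The sketch below assumes, purely for illustration, that Multiply uses a checked expression so that overflow raises an OverflowException; the article does not say how the real Calculator behaves. It also uses Assert.Throws, which assumes a later NUnit release than the attribute-based style shown above.

```csharp
using System;
using NUnit.Framework;

// Hypothetical stand-in for the class under test.
public class Calculator
{
    public int Multiply(int a, int b)
    {
        // checked makes integer overflow throw instead of wrapping silently.
        return checked(a * b);
    }
}

[TestFixture]
public class Calculator_BoundaryTests
{
    [Test]
    public void Test_MultiplyOverflow()
    {
        Calculator calculator = new Calculator();

        // int.MaxValue * 2 does not fit in a 32-bit signed integer.
        Assert.Throws<OverflowException>(
            () => calculator.Multiply(int.MaxValue, 2));
    }
}
```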

We should always write a failure test case as well. Consider the following test case:

    [Test]
    public void Test_AddFailure()
    {
        int result = calculator.Add(2, 2);

        // Guards against an implementation that returns a wrong value.
        Assertion.Assert(result != 1);
    }

This may look like a pointless test, but consider the following implementation of the Add method:

    public int Add(int a, int b)
    {
        return a/b;   // bug: divides instead of adding
    }

This is an obvious error, possibly a typo, but remember that there is no room for typos in code.
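For contrast, here is one possible correct Calculator, shown only as a sketch. The article never lists the real class, so the method bodies are assumptions; in particular, the checked multiplication is an illustrative choice rather than something the article specifies.

```csharp
using System;

namespace UnitTestApplication
{
    // Hypothetical implementation of the class under test.
    public class Calculator
    {
        public int Add(int a, int b)
        {
            return a + b;
        }

        public int Subtract(int a, int b)
        {
            return a - b;
        }

        public int Multiply(int a, int b)
        {
            // Assumption: overflow should raise an exception
            // rather than wrap around silently.
            return checked(a * b);
        }

        public int Divide(int a, int b)
        {
            // Integer division; throws DivideByZeroException when b is 0.
            return a / b;
        }
    }
}
```

With this version, Add(2, 2) returns 4, so both Test_Add and Test_AddFailure pass, while the buggy version above returns 1 and fails both.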

Some Tips and Tricks

Using VS Debugger with NUnit Framework

NUnit loads and runs test assemblies in a separate AppDomain, so you cannot debug them simply by starting the test project in Visual Studio.

However, you can have Visual Studio launch an external program that loads the assembly. Follow these steps:

Set your test case assembly as the startup project.

Open the property sheet for your test case assembly and set the Debug Mode property to "Program".

This property is set per project and persisted in the project file. The Start Application property does not become writable until you press the "Apply" button after setting Debug Mode.

Set Start Application to nunit-gui.exe, which is in the bin folder of the NUnit Framework folder.

You can define command line arguments for nunit-gui.exe. Alternatively, you can first start nunit-gui.exe externally, open your test case assembly, run it once, and save it. nunit-gui.exe remembers the last opened file and opens it again when launched from Visual Studio.

With this setup you can run and debug your application code with all the features that Visual Studio provides.

Using Configuration Files:

Using configuration files with NUnit is tricky, but once you know where to put the file, it is a piece of cake. Remember that NUnit creates a separate AppDomain to load the test case assembly. Our configuration file must therefore be in the working folder of this AppDomain, and its name must be [TestCaseAssemblyName].dll.config. In a typical scenario, this folder is the "bin" subfolder of the test case assembly project. With this knowledge in hand, you can put all your configuration settings in this file and everything will work like magic.

Here is my demo configuration file and the associated test case:

    <?xml version="1.0" encoding="utf-8"?>
    <configuration>
      <appSettings>
        <add key="test" value="FirstTest"/>
      </appSettings>
    </configuration>

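and the test case:

    [Test]
    public void Test_Configuration()
    {
        // Reads the "test" key from [TestCaseAssemblyName].dll.config.
        string test = System.Configuration.ConfigurationSettings.AppSettings["test"];

        Console.WriteLine(test);

        // Expected value matches the value in the configuration file above.
        Assertion.AssertEquals("FirstTest", test);
    }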

On a side note, look at the line Console.WriteLine(test). nunit-gui.exe is a smart GUI that captures all console output and presents it in one of its output tabs. You can use this simple trick for quick debugging.

Use Factory Pattern

Use the factory pattern to define tests that exercise the same functionality with different input values.
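One way to apply this idea, sketched under the assumption that a later NUnit release with the TestCaseSource attribute is available, is a static factory method that produces the input and expected-result combinations. The Calculator class, the fixture name, and the AddCases method are hypothetical names chosen for this example.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Hypothetical stand-in for the class under test.
public class Calculator
{
    public int Add(int a, int b)
    {
        return a + b;
    }
}

[TestFixture]
public class Calculator_AddCases
{
    // Factory method: builds the inputs and the expected return value
    // for each test case.
    public static IEnumerable<TestCaseData> AddCases()
    {
        yield return new TestCaseData(2, 2).Returns(4);
        yield return new TestCaseData(-1, 1).Returns(0);
        yield return new TestCaseData(0, 0).Returns(0);
    }

    // NUnit runs this method once per case and compares the
    // return value with the value given to Returns().
    [TestCaseSource("AddCases")]
    public int Test_Add(int a, int b)
    {
        return new Calculator().Add(a, b);
    }
}
```

Each case is reported as its own test, so one failing input does not hide the results for the others, and new inputs can be added without writing new test methods.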

Clean Cache

The NUnit Framework caches test case assembly information at "C:\Documents and Settings\\Local Settings\Temp\nunit20\". It is a good idea to clean this cache periodically, especially if you run a large base of test cases.

The Naming Convention and Standards

As discussed above, all test cases should go in a separate assembly. A suggested name for such an assembly is [CodeAssembly].UnitTests.dll, e.g.

UnitTestApplication.UnitTests.dll

You should follow the naming rules defined in the .NET Framework SDK. FxCop is a good tool for enforcing the naming convention.

This assembly should contain at least one test method for every application method.

Test case names should follow the pattern Test_[MethodToBeTested][SomeAttribute]

e.g.

Test_Add,

Test_AddFailure.

I hope that with this information in hand you will be able to write better test cases, test fixtures, and test suites that not only increase your productivity but also build the discipline needed to produce efficient, bug-free code.

Happy Programming!