I am using LLBLGen Pro for a couple of my current projects. It is a really great tool: it saves us a lot of development time and increases our productivity considerably.

LLBLGen Pro generates a complete data-access tier and business objects tier for you (in C# or VB.NET), utilizing powerful O/R mapping technology and a flexible task-based code generator, allowing you to at least double your productivity!

LLBLGen Pro comes with a state-of-the-art visual O/R mapping designer to setup and manage your project.

LLBLGen Pro has a task-based code generator framework, which uses task-performing assemblies, called task performers, to execute task definitions. One of the shipped task performers is a template parser/interpreter which produces code using the template set you specify. All customers have access to the SDK, which contains the full source code for all shipped task performers and information on how to produce your own task performers and, for example, how to extend the template language or write your own templates. Another task performer can handle templates written in C# / VB.NET and offers flexible access to every object in the loaded LLBLGen Pro project. Customers have access to the free Template Studio IDE for creating / editing / testing templates and template sets.

It has four building blocks for your code: Entities, Typed Lists, Typed Views and Stored Procedure calls.

Entities are elements mapped onto tables or views in your catalog(s)/schema set. All fields in a table or view, or a subset of them, are mapped onto fields in an entity. An entity can be a subtype of another entity through inheritance, in which case it inherits the supertype’s fields, relations, etc. An entity also has fields mapped onto its relations, so for example you can have the field ‘Orders’ mapped onto the relation Customer – Order in the entity ‘Customer’. Customer.Orders returns all Order entity objects in a collection filtered on that customer. Order.Customer returns a single customer entity object, related to that order entity. Entities and all the relations between them (1:1, 1:n, m:1 and m:n) are determined automatically from a catalog/schema set. You can add your own relations in the designer, for example to relate an entity mapped onto a table to an entity mapped onto a view, or when there are no foreign keys defined in the database schema. You can map as many entities onto a table/view as you want.

Typed Lists are read-only lists based on a subset of fields from one or more entities which have a relation (1:1, 1:n or m:1), for example a typed list CustomerOrder with a subset of the fields from the entities Customer and Order.

Typed Views are read-only view definitions which are 1:1 mapped on views in the catalog/schema set.

Stored Procedure calls are call definitions to existing stored procedures. This way you can embed existing stored procedures in your code, and you don’t lose investments in current applications with stored procedures.

In SelfServicing, an entity collection is defined per entity type. It offers a rich set of functionality to work directly with one or more entities of the entity type the collection is related to.

A 30-day trial version of the software is available for download.

Here are the steps to generate the code and use it:

Step 1 : Create an LLBLGen Pro project

I start with creating the LLBLGen Pro project first. It doesn’t really matter much, but as we don’t have to come back to the LLBLGen Pro designer after this step, it’s easier to do it as the first thing in this little project.

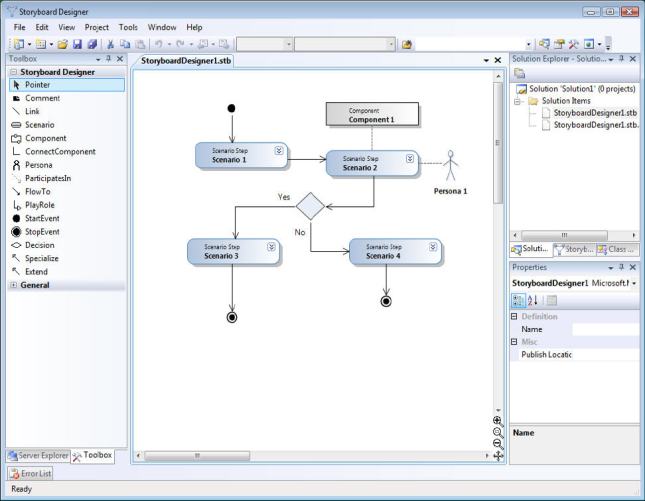

After we’ve created the project with a few clicks in the LLBLGen Pro designer, it looks something like this:

As we don’t need anything fancy, like inheritance, we can leave it at this and simply move on to step 2.

Step 2 : Generate the code

Generating code is also a simple procedure. Press F7, select some parameters that match our goals for the code, and we’re set. LLBLGen Pro v2.0 has a redesigned code generation setup mechanism, though I’d like to keep it under wraps until the release date, to keep a bit of an advantage over the competition. For this article, the details of the code generation process aren’t that important anyway.

I’ve chosen C# as my target language, .NET 2.0 as the target platform and ‘adapter’ as the paradigm to use for my code. LLBLGen Pro supports two different persistence paradigms: SelfServicing, which has the persistence logic built into the entities (e.g. customer.Save()), and Adapter, which has the persistence logic placed in a separate class, the adapter. Adapter makes it possible to write code which targets multiple databases at the same time, and to work with the entities without seeing any persistence logic. This has the advantage of keeping logic separated into different tiers (so GUI developers can’t take shortcuts to save an entity, bypassing the BL logic).
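To make the difference between the two paradigms concrete, here is a minimal sketch using hypothetical stand-in classes. SelfServicingCustomer, AdapterCustomer, SketchAdapter and FakeStore are all made up for this illustration and are not the classes LLBLGen Pro generates; the sketch only shows where the save logic lives in each paradigm.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory store standing in for a real database.
public static class FakeStore
{
    public static readonly Dictionary<string, string> Customers =
        new Dictionary<string, string>();
}

// SelfServicing style: the entity itself carries the persistence logic.
public class SelfServicingCustomer
{
    public string CustomerId;
    public string CompanyName;

    public SelfServicingCustomer(string customerId, string companyName)
    {
        CustomerId = customerId;
        CompanyName = companyName;
    }

    public bool Save()
    {
        FakeStore.Customers[CustomerId] = CompanyName;
        return true;
    }
}

// Adapter style: the entity is plain data without any persistence logic...
public class AdapterCustomer
{
    public string CustomerId;
    public string CompanyName;

    public AdapterCustomer(string customerId, string companyName)
    {
        CustomerId = customerId;
        CompanyName = companyName;
    }
}

// ...and a separate class owns the persistence logic.
public class SketchAdapter : IDisposable
{
    public bool SaveEntity(AdapterCustomer customer)
    {
        FakeStore.Customers[customer.CustomerId] = customer.CompanyName;
        return true;
    }

    public void Dispose() { }
}

public static class ParadigmDemo
{
    public static void Main()
    {
        // SelfServicing: anyone holding the entity can save it.
        SelfServicingCustomer first = new SelfServicingCustomer("CUST1", "First Company");
        first.Save();

        // Adapter: saving requires going through the adapter object.
        AdapterCustomer second = new AdapterCustomer("CUST2", "Second Company");
        using (SketchAdapter adapter = new SketchAdapter())
        {
            adapter.SaveEntity(second);
        }

        Console.WriteLine(FakeStore.Customers.Count);
    }
}
```

In the Adapter variant a GUI layer can be handed AdapterCustomer objects without ever getting a reference to the adapter, which is the tier separation described above.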

After the code’s been generated, which took about 4 seconds, it’s time to start Visual Studio.NET 2005 and start some serious application development!

Step 3 : Setting up the VS.NET project

In the folder where I’ve generated my code, I’ve created a folder called ‘UdaiTest’ and a virtual directory called LLBLGenProTest on the default site of the Windows XP IIS installation, which points to that folder. I load the two generated projects into VS.NET (Adapter uses two projects: a database generic project, which can be shared among multiple database specific projects, and a database specific project) and create a new website using the vanilla ‘Your Folder Is The Website’ project type as shipped with VS.NET 2005, using the file system.

Next, I set up the references correctly and add a web.config file to the website project.

The database specific project generated in step 2 contains a generated app.config file, which holds our connection string. I copy the appSettings tag with the connection string over to the web.config file of my site, and it’s now ready for data-access.
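As a rough sketch, the copied fragment in web.config might look like the following. The key name Main.ConnectionString and the connection string value are assumptions for illustration; copy the actual tag from your own generated app.config rather than typing this in.

```xml
<configuration>
  <appSettings>
    <!-- Assumed key name and placeholder value; use the tag from the generated app.config. -->
    <add key="Main.ConnectionString"
         value="data source=(local);initial catalog=Northwind;integrated security=SSPI" />
  </appSettings>
</configuration>
```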

OK, everything is set up without a single line of typing, and we’re now ready to create webforms which actually do something. On to step 4!

Step 4 : Build a page that uses our LLBLGen Pro layer

As we’re working with entities, I think it’s a good opportunity to show off the LLBLGenProDataSource controls. In this step I’ve dragged an LLBLGenProDataSource2 control onto my form and opened its designer to configure it for this particular purpose. The ‘2’ isn’t a typo: the LLBLGenProDataSource control is used by SelfServicing, the LLBLGenProDataSource2 control is used by Adapter. The ‘2’ suffix has been used for Adapter since the beginning, so to keep everything consistent with already familiar constructs, the ‘2’ is used as the suffix here as well.

LLBLGen Pro’s datasource controls are really powerful controls.

    using System;
    using Northwind.EntityClasses;
    using Northwind.HelperClasses;
    using SD.LLBLGen.Pro.ORMSupportClasses;

    public partial class _Default : System.Web.UI.Page
    {
        protected void Page_Load(object sender, EventArgs e)
        {
            if(!Page.IsPostBack)
            {
                // set initial filter and sorter for datasource control.
                _customerDS.FilterToUse =
                    new RelationPredicateBucket(CustomerFields.Country == "INDIA");
                _customerDS.SorterToUse =
                    new SortExpression(CustomerFields.CompanyName | SortOperator.Ascending);
            }
        }
    }

_customerDS is my LLBLGenProDataSource2 control, which is the datasource for my grid. Running this page will give the following results:

Not bad for 2 lines of code and some mousing in the designer. Now let’s move on to something more serious: projections and subsets!

Step 5 : Data Shaping and Projections

Today we don’t yet have the nice features which come with Linq, such as anonymous types. So if we want to create a projection of a resultset onto something else, we either have to define the container class which will contain the projected data up-front, or we have to store it in a generic container, like a DataTable. As we’re going to databind the resultset directly to a grid, a DataTable will do just fine here. Don’t feel sad though, I’ll show you both methods here: one using the DataTable and one using the new LLBLGen Pro v2 projection technology, which allows you to project any resultset onto any other construct using generic code.

DataTable approach

But first, the approach using the DataTable. The query has two scalar queries inside the select list: one for the number of orders and one for the last order date. Because of these scalar queries, it’s obvious this data can’t be stored in an entity, as an entity is mapped onto tables or views, not dynamically created resultsets. So in LLBLGen Pro you’ll use a dynamic list for this. This is a query built from strongly typed objects, which are the building blocks of the meta-data used by the O/R mapper core, and whose resultset is stored inside a DataTable. This gives the following code, which I’ve placed in the Page_Load handler:

    // create a dynamic list with in-list scalar subqueries
    ResultsetFields fields = new ResultsetFields(6);

    // define the fields in the select list, one for each slot.
    fields.DefineField(CustomerFields.CustomerId, 0);
    fields.DefineField(CustomerFields.CompanyName, 1);
    fields.DefineField(CustomerFields.City, 2);
    fields.DefineField(CustomerFields.Region, 3);
    fields.DefineField(new EntityField2("NumberOfOrders",
        new ScalarQueryExpression(OrderFields.OrderId.SetAggregateFunction(AggregateFunction.Count),
            (CustomerFields.CustomerId == OrderFields.CustomerId))), 4);
    fields.DefineField(new EntityField2("LastOrderDate",
        new ScalarQueryExpression(OrderFields.OrderDate.SetAggregateFunction(AggregateFunction.Max),
            (CustomerFields.CustomerId == OrderFields.CustomerId))), 5);

    DataTable results = new DataTable();
    using(DataAccessAdapter adapter = new DataAccessAdapter())
    {
        // fetch it, using a filter and a sort expression
        adapter.FetchTypedList(fields, results,
            new RelationPredicateBucket(CustomerFields.Country == "INDIA"), 0,
            new SortExpression(CustomerFields.CompanyName | SortOperator.Ascending), true);
    }

    // bind it to the grid.
    GridView1.DataSource = results;
    GridView1.DataBind();

By now you might wonder how I’m able to use compile-time checked filter and sort expression constructs in vanilla .NET 2.0. LLBLGen Pro uses operator overloading for this, which gives a compile-time checked way to formulate queries without having to write a lot of code. A string-based query language is perhaps an alternative for some, but it won’t be compile-time checked: if an entity’s name or a field name changes, the compiler won’t notice it and your code will break at runtime. With this mechanism it won’t, as these name changes will be spotted by the compiler.
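To illustrate the general technique, here is a minimal, self-contained sketch of how operator overloading can turn expressions like field == "value" and field | SortOperator.Ascending into query objects. The classes Field, FieldComparePredicate, SortClause and CustomerFieldsSketch, and the local SortOperator enum, are hypothetical stand-ins written for this example; this is not LLBLGen Pro’s actual implementation.

```csharp
using System;

// Local enum for this sketch only.
public enum SortOperator { Ascending, Descending }

// Hypothetical predicate object produced by the overloaded comparison operators.
public sealed class FieldComparePredicate
{
    private readonly string _fieldName, _operator, _value;

    public FieldComparePredicate(string fieldName, string op, string value)
    {
        _fieldName = fieldName;
        _operator = op;
        _value = value;
    }

    public override string ToString()
    {
        return _fieldName + " " + _operator + " '" + _value + "'";
    }
}

// Hypothetical sort clause object produced by the overloaded | operator.
public sealed class SortClause
{
    private readonly string _fieldName;
    private readonly SortOperator _direction;

    public SortClause(string fieldName, SortOperator direction)
    {
        _fieldName = fieldName;
        _direction = direction;
    }

    public override string ToString()
    {
        return _fieldName + (_direction == SortOperator.Ascending ? " ASC" : " DESC");
    }
}

public sealed class Field
{
    public readonly string Name;

    public Field(string name) { Name = name; }

    // == does not return bool here: it builds a predicate object instead.
    public static FieldComparePredicate operator ==(Field field, string value)
    {
        return new FieldComparePredicate(field.Name, "=", value);
    }

    // C# requires != to be defined whenever == is overloaded.
    public static FieldComparePredicate operator !=(Field field, string value)
    {
        return new FieldComparePredicate(field.Name, "<>", value);
    }

    // | combines a field with a sort direction into a sort clause.
    public static SortClause operator |(Field field, SortOperator direction)
    {
        return new SortClause(field.Name, direction);
    }

    public override bool Equals(object obj) { return ReferenceEquals(this, obj); }
    public override int GetHashCode() { return Name.GetHashCode(); }
}

// Stand-in for a generated class exposing one typed property per field.
public static class CustomerFieldsSketch
{
    public static Field Country { get { return new Field("Country"); } }
    public static Field CompanyName { get { return new Field("CompanyName"); } }
}

public static class OperatorDemo
{
    public static void Main()
    {
        FieldComparePredicate filter = CustomerFieldsSketch.Country == "INDIA";
        SortClause sorter = CustomerFieldsSketch.CompanyName | SortOperator.Ascending;
        Console.WriteLine(filter);
        Console.WriteLine(sorter);
    }
}
```

If the Country property were renamed in the stand-in class, the expression in Main would stop compiling, which is the compile-time safety a string-based query would not give you.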

LLBLGen Pro v2’s projection approach

I promised I’d also show a different approach, namely with projections. For this we first write our simple CustomerData class, which will contain the 6 properties we have to store for each row. It’s as simple as this:

    public class CustomerData
    {
        private string _customerId, _companyName, _city, _region;
        private int _numberOfOrders;
        private DateTime _lastOrderDate;

        public string CustomerId
        {
            get { return _customerId; }
            set { _customerId = value; }
        }

        public string CompanyName
        {
            get { return _companyName; }
            set { _companyName = value; }
        }

        public string City
        {
            get { return _city; }
            set { _city = value; }
        }

        public string Region
        {
            get { return _region; }
            set { _region = value; }
        }

        public int NumberOfOrders
        {
            get { return _numberOfOrders; }
            set { _numberOfOrders = value; }
        }

        public DateTime LastOrderDate
        {
            get { return _lastOrderDate; }
            set { _lastOrderDate = value; }
        }
    }

For our query we simply use the same setup as we’ve used with the DataTable fetch, only now we’ll specify a projector and a set of projector definitions. We furthermore tell LLBLGen Pro to fetch the data as a projection, which means that LLBLGen Pro will project the IDataReader directly onto the constructs passed in, using the projector objects. As you can see below, this is generic code and it’s a standard approach which can be used in other contexts as well, for example to project in-memory entity collection data onto different constructs. The projectors are all defined through interfaces, so you can create your own projection engines as well. One nice thing is that users will also be able to project stored procedure resultsets onto whatever construct they might want to use, including entity classes. So fetching data with a stored procedure into a class, for example an entity, will be easy and straightforward as well.

Ok back to the topic at hand. The code to fetch and bind the resultset using custom classes looks as follows:

    // create a dynamic list with in-list scalar subqueries
    ResultsetFields fields = new ResultsetFields(6);

    // define the fields in the select list, one for each slot.
    fields.DefineField(CustomerFields.CustomerId, 0);
    fields.DefineField(CustomerFields.CompanyName, 1);
    fields.DefineField(CustomerFields.City, 2);
    fields.DefineField(CustomerFields.Region, 3);
    fields.DefineField(new EntityField2("NumberOfOrders",
        new ScalarQueryExpression(OrderFields.OrderId.SetAggregateFunction(AggregateFunction.Count),
            (CustomerFields.CustomerId == OrderFields.CustomerId))), 4);
    fields.DefineField(new EntityField2("LastOrderDate",
        new ScalarQueryExpression(OrderFields.OrderDate.SetAggregateFunction(AggregateFunction.Max),
            (CustomerFields.CustomerId == OrderFields.CustomerId))), 5);

    // the container the results will be stored in.
    List<CustomerData> results = new List<CustomerData>();

    // Define the projection.
    DataProjectorToCustomClass<CustomerData> projector =
        new DataProjectorToCustomClass<CustomerData>(results);
    List<IDataValueProjector> valueProjectors = new List<IDataValueProjector>();
    valueProjectors.Add(new DataValueProjector("CustomerId", 0, typeof(string)));
    valueProjectors.Add(new DataValueProjector("CompanyName", 1, typeof(string)));
    valueProjectors.Add(new DataValueProjector("City", 2, typeof(string)));
    valueProjectors.Add(new DataValueProjector("Region", 3, typeof(string)));
    valueProjectors.Add(new DataValueProjector("NumberOfOrders", 4, typeof(int)));
    valueProjectors.Add(new DataValueProjector("LastOrderDate", 5, typeof(DateTime)));

    using(DataAccessAdapter adapter = new DataAccessAdapter())
    {
        // let LLBLGen Pro fetch the data and directly project it into the List of custom classes
        // by using the projections we've defined above.
        adapter.FetchProjection(valueProjectors, projector, fields,
            new RelationPredicateBucket(CustomerFields.Country == "USA"), 0,
            new SortExpression(CustomerFields.CompanyName | SortOperator.Ascending),
            true);
    }

    // bind it to the grid.
    GridView1.DataSource = results;
    GridView1.DataBind();

It’s a bit more code, as you have to define the projections, but at the same time it has the nice aspect of having the data in a typed class (CustomerData) instead of a DataTable row.
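For readers curious about what projecting rows onto a custom class by column index and property name could look like conceptually, here is a small self-contained sketch using reflection. ColumnToProperty, RowProjector and OrderSummary are hypothetical names invented for this illustration, and the rows are made-up sample data; this is only an assumption about the general idea, not how LLBLGen Pro implements its projectors.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical mapping of a source column index onto a target property name.
public sealed class ColumnToProperty
{
    public readonly string PropertyName;
    public readonly int SourceIndex;

    public ColumnToProperty(string propertyName, int sourceIndex)
    {
        PropertyName = propertyName;
        SourceIndex = sourceIndex;
    }
}

public static class RowProjector
{
    // Creates one T per row and copies each mapped column into the named property.
    public static List<T> Project<T>(IEnumerable<object[]> rows,
        IList<ColumnToProperty> mappings) where T : class, new()
    {
        List<T> results = new List<T>();
        foreach (object[] row in rows)
        {
            T target = new T();
            foreach (ColumnToProperty mapping in mappings)
            {
                PropertyInfo property = typeof(T).GetProperty(mapping.PropertyName);
                object value = row[mapping.SourceIndex];
                // Unknown properties and NULL values are skipped, so the
                // target property keeps its default value in those cases.
                if (property != null && value != null && !(value is DBNull))
                {
                    property.SetValue(target, value, null);
                }
            }
            results.Add(target);
        }
        return results;
    }
}

// Small typed container for the sample data.
public class OrderSummary
{
    public string CustomerId { get; set; }
    public int NumberOfOrders { get; set; }
}

public static class ProjectionDemo
{
    public static void Main()
    {
        // Made-up sample rows standing in for a data reader's output.
        List<object[]> rows = new List<object[]>();
        rows.Add(new object[] { "CUST1", 6 });
        rows.Add(new object[] { "CUST2", DBNull.Value });

        List<ColumnToProperty> mappings = new List<ColumnToProperty>();
        mappings.Add(new ColumnToProperty("CustomerId", 0));
        mappings.Add(new ColumnToProperty("NumberOfOrders", 1));

        List<OrderSummary> summaries = RowProjector.Project<OrderSummary>(rows, mappings);
        foreach (OrderSummary summary in summaries)
        {
            Console.WriteLine(summary.CustomerId + ": " + summary.NumberOfOrders);
        }
    }
}
```

The sketch looks up the property for every value, which keeps it short; a real projection engine would presumably cache that lookup per column.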

Enjoy Programming

Will write more on this later…
